Measuring Workshop Effectiveness: Instrument Design & Evaluation

Overview

In early 2022, I was contracted to lead the design, development, and implementation of a comprehensive evaluation framework for a 4-day Social and Behavior Change Communication (SBCC) workshop. The evaluation included both process and outcome components to assess workshop delivery quality and participant learning outcomes.

Approach

For the process evaluation, I developed a 16-item Likert-scale survey to assess the perceived quality of the workshop. It focused on participant satisfaction and engagement, facilitator expertise, and the workshop’s relevance to participants’ needs and expectations. The survey also identified strengths and areas for continuous improvement and gave participants a mechanism to shape the workshop experience in real time.

For the outcome evaluation, I developed a 16-item Likert-scale survey drawing on constructs from Bandura’s Social Cognitive Theory. This theory-driven approach ensured that the instrument captured participants’ knowledge, skills, confidence, and perceptions in a systematic and valid manner.

Methods

Process Evaluation

Data on implementation quality and participant engagement were collected through the daily 16-item survey, facilitator observations, attendance records, and anonymous participant feedback forms administered throughout the workshop. This allowed monitoring of adherence to planned activities and identification of strengths and challenges in workshop delivery.

Outcome Evaluation

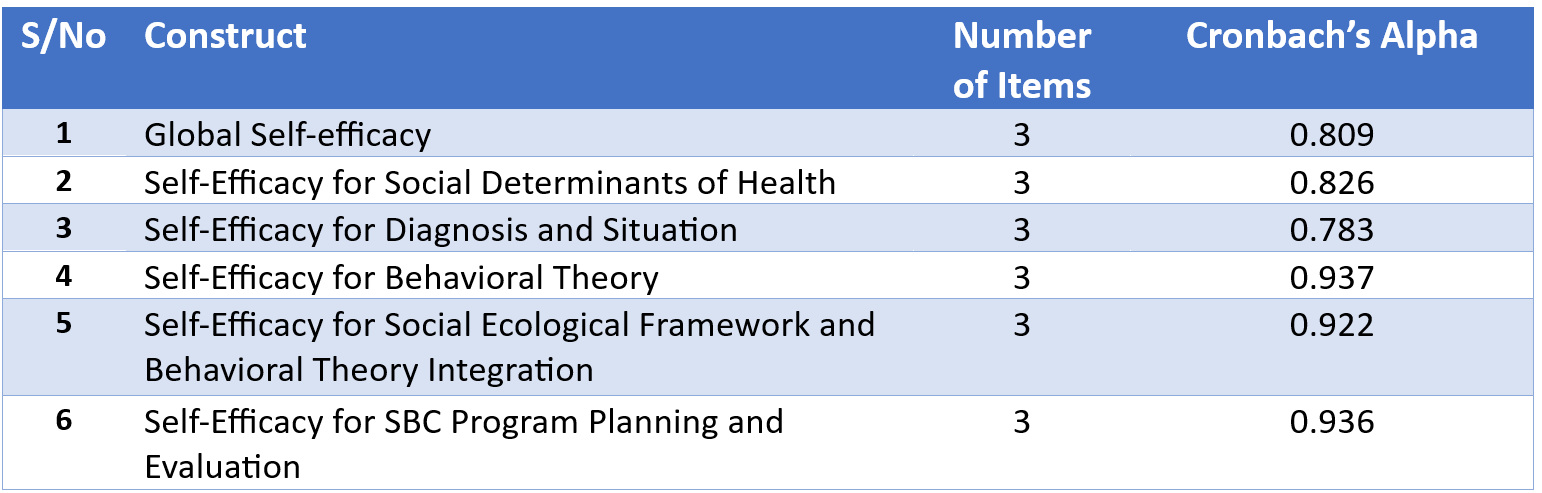

Data were collected through the survey, which measured self-efficacy across six constructs relevant to SBCC strategic planning competencies. Each construct included three items assessing participants’ confidence, knowledge, and skills. The survey was administered digitally before and after the workshop to quantify changes in self-efficacy.

Analysis & Tools

All surveys were administered through Google Forms. Data were then exported to Excel for cleaning and management. Responses were anonymized, and descriptive and inferential analyses were conducted in SAS on aggregated data for both components of the evaluation.

Process Evaluation

A total of 47 responses were collected across the 4 days. I calculated a mean score for each item, then averaged the item means within each dimension of perceived quality to obtain dimension scores. Dimension scores were computed for each day and then averaged across days to derive overall perceived quality scores.
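The aggregation described above can be sketched in a few lines of Python. This is a minimal illustration of one reading of those steps, not the SAS code used in the evaluation: the dimension names, item labels (`q1` to `q4`), and response values are all made up for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping of quality dimensions to survey items (illustrative names only).
DIMENSIONS = {
    "engagement": ["q1", "q2"],
    "facilitator_expertise": ["q3", "q4"],
}

# Made-up Likert responses (1-5); each record is one participant on one day.
responses = [
    {"day": 1, "q1": 4, "q2": 5, "q3": 5, "q4": 4},
    {"day": 1, "q1": 5, "q2": 4, "q3": 5, "q4": 5},
    {"day": 2, "q1": 4, "q2": 4, "q3": 5, "q4": 5},
    {"day": 2, "q1": 5, "q2": 5, "q3": 4, "q4": 5},
]


def daily_dimension_scores(records, dimensions):
    """Return {day: {dimension: mean of that dimension's item means}}."""
    by_day = defaultdict(list)
    for record in records:
        by_day[record["day"]].append(record)

    scores = {}
    for day, day_records in sorted(by_day.items()):
        scores[day] = {
            # Item mean first, then the mean of item means within the dimension.
            dim: mean(mean(r[item] for r in day_records) for item in items)
            for dim, items in dimensions.items()
        }
    return scores


def overall_dimension_scores(daily, dimensions):
    """Average each dimension's daily score across all days."""
    return {dim: mean(day[dim] for day in daily.values()) for dim in dimensions}


daily = daily_dimension_scores(responses, DIMENSIONS)
overall = overall_dimension_scores(daily, DIMENSIONS)
```

Averaging item means before averaging across days gives every day equal weight, even if the number of respondents differs from day to day. Pooling all responses first would instead weight days by attendance.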

Outcome Evaluation

A total of 12 responses were collected at pre-test and post-test. I calculated Cronbach’s alpha coefficients to assess the internal consistency of the scales. These ranged from α = 0.78 to 0.94, indicating acceptable to excellent reliability.
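For readers unfamiliar with the statistic, Cronbach’s alpha for a scale of k items is k/(k − 1) × (1 − sum of item variances / variance of the total score). The sketch below implements that standard formula in plain Python. It is not the SAS procedure used in the evaluation, and the response matrix is invented for illustration.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one scale.

    rows: one list of item scores per respondent, all of the same length.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's total score on the scale.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up responses: 5 respondents x 3 items on a 1-5 Likert scale.
example_rows = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
alpha = cronbach_alpha(example_rows)
```

With only three items per construct and a small sample, alpha estimates can be unstable, so they are best read alongside the item-level scores.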

To test for statistically significant changes in self-efficacy scores before and after the workshop, I conducted paired-samples t-tests in SAS. These tests helped identify which pre-to-post improvements were statistically meaningful following the workshop’s theory-driven curriculum.
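The logic of a paired-samples t-test can be illustrated with a short standard-library Python sketch. It is an assumption-laden stand-in for the SAS analysis, not a reproduction of it: the scores are made up, and the two-sided p-value is obtained by numerically integrating the Student t density with Simpson’s rule rather than by calling a statistics package.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def paired_t_test(pre, post, steps=2000):
    """Return (t statistic, degrees of freedom, two-sided p-value).

    pre and post must be matched by respondent; steps must be even.
    Raises ZeroDivisionError if every respondent changed by the same amount.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pre/post pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    df = len(diffs) - 1
    t_stat = mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))

    # Simpson's rule for the area under the t density on [0, |t|].
    upper = abs(t_stat)
    h = upper / steps
    area = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3

    p_value = max(0.0, 1 - 2 * area)
    return t_stat, df, p_value


# Made-up construct scores for four respondents (illustration only).
pre_scores = [3, 3, 3, 3]
post_scores = [4, 5, 4, 5]
t_stat, df, p_value = paired_t_test(pre_scores, post_scores)
```

Because the test works on each respondent’s difference score, it requires that pre-test and post-test responses can be matched to the same person.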

Results

Process Evaluation

Participant Engagement (avg. 4.60) and Facilitator Expertise (avg. 4.73) were the highest-scoring dimensions, highlighting effective engagement techniques and strong facilitator knowledge.

Participants consistently rated sessions as aligned with the agenda and relevant to learning objectives.

Modules and sessions were perceived as well-structured and organized.

Workshop logistical arrangements, including venue, audio-visual setup, seating, and refreshments, were generally rated positively.

Outcome Evaluation

Analysis revealed statistically significant improvements (p < 0.05) across all six self-efficacy constructs from pre-test to post-test. Below are the three strongest improvements observed:

Behavioral Theory Competence: Participants showed the greatest improvement in understanding and applying behavioral theories, with mean scores rising from 3.00 to 4.19.

Integration of Social Ecological Framework and Behavioral Theory: Confidence in developing SBCC strategies by integrating theoretical frameworks increased significantly, from 3.22 to 4.33.

Program Planning and Evaluation: Skills in designing objectives, logic models, and evaluation techniques improved markedly, with scores going from 3.44 to 4.38.

These outcomes highlight the workshop’s success in strengthening critical SBCC planning and implementation competencies among participants.

Outputs

Validated Measurement Tools: Developed and tested a reliable, theory-based 16-item survey instrument to assess SBCC self-efficacy, which demonstrated strong internal consistency (Cronbach’s α = 0.78–0.94), along with a process evaluation tool to assess the perceived quality of the workshop.

Evidence of Learning Gains: Quantitative data showed statistically significant improvements in participant competencies across all assessed SBCC domains, confirming the workshop’s effectiveness.

Participant Feedback for Improvement: Process evaluation data provided actionable insights into participant satisfaction with content, facilitation, and logistics, supporting continuous refinement of future workshops.

Comprehensive Evaluation Report: Delivered a detailed report synthesizing outcome and process findings, including data visualizations, interpretation of results, and recommendations for program enhancement.

Capacity Building: Strengthened the ability of workshop organizers to use theory-driven evaluation methods and data analysis tools for future SBCC workshop and training assessments.